- Machine Learning Basics

- Machine Learning - Home

- Machine Learning - Getting Started

- Machine Learning - Basic Concepts

- Machine Learning - Python Libraries

- Machine Learning - Applications

- Machine Learning - Life Cycle

- Machine Learning - Required Skills

- Machine Learning - Implementation

- Machine Learning - Challenges & Common Issues

- Machine Learning - Limitations

- Machine Learning - Reallife Examples

- Machine Learning - Data Structure

- Machine Learning - Mathematics

- Machine Learning - Artificial Intelligence

- Machine Learning - Neural Networks

- Machine Learning - Deep Learning

- Machine Learning - Getting Datasets

- Machine Learning - Categorical Data

- Machine Learning - Data Loading

- Machine Learning - Data Understanding

- Machine Learning - Data Preparation

- Machine Learning - Models

- Machine Learning - Supervised

- Machine Learning - Unsupervised

- Machine Learning - Semi-supervised

- Machine Learning - Reinforcement

- Machine Learning - Supervised vs. Unsupervised

- Machine Learning Data Visualization

- Machine Learning - Data Visualization

- Machine Learning - Histograms

- Machine Learning - Density Plots

- Machine Learning - Box and Whisker Plots

- Machine Learning - Correlation Matrix Plots

- Machine Learning - Scatter Matrix Plots

- Statistics for Machine Learning

- Machine Learning - Statistics

- Machine Learning - Mean, Median, Mode

- Machine Learning - Standard Deviation

- Machine Learning - Percentiles

- Machine Learning - Data Distribution

- Machine Learning - Skewness and Kurtosis

- Machine Learning - Bias and Variance

- Machine Learning - Hypothesis

- Regression Analysis In ML

- Machine Learning - Regression Analysis

- Machine Learning - Linear Regression

- Machine Learning - Simple Linear Regression

- Machine Learning - Multiple Linear Regression

- Machine Learning - Polynomial Regression

- Classification Algorithms In ML

- Machine Learning - Classification Algorithms

- Machine Learning - Logistic Regression

- Machine Learning - K-Nearest Neighbors (KNN)

- Machine Learning - Naïve Bayes Algorithm

- Machine Learning - Decision Tree Algorithm

- Machine Learning - Support Vector Machine

- Machine Learning - Random Forest

- Machine Learning - Confusion Matrix

- Machine Learning - Stochastic Gradient Descent

- Clustering Algorithms In ML

- Machine Learning - Clustering Algorithms

- Machine Learning - Centroid-Based Clustering

- Machine Learning - K-Means Clustering

- Machine Learning - K-Medoids Clustering

- Machine Learning - Mean-Shift Clustering

- Machine Learning - Hierarchical Clustering

- Machine Learning - Density-Based Clustering

- Machine Learning - DBSCAN Clustering

- Machine Learning - OPTICS Clustering

- Machine Learning - HDBSCAN Clustering

- Machine Learning - BIRCH Clustering

- Machine Learning - Affinity Propagation

- Machine Learning - Distribution-Based Clustering

- Machine Learning - Agglomerative Clustering

- Dimensionality Reduction In ML

- Machine Learning - Dimensionality Reduction

- Machine Learning - Feature Selection

- Machine Learning - Feature Extraction

- Machine Learning - Backward Elimination

- Machine Learning - Forward Feature Construction

- Machine Learning - High Correlation Filter

- Machine Learning - Low Variance Filter

- Machine Learning - Missing Values Ratio

- Machine Learning - Principal Component Analysis

- Machine Learning Miscellaneous

- Machine Learning - Performance Metrics

- Machine Learning - Automatic Workflows

- Machine Learning - Boost Model Performance

- Machine Learning - Gradient Boosting

- Machine Learning - Bootstrap Aggregation (Bagging)

- Machine Learning - Cross Validation

- Machine Learning - AUC-ROC Curve

- Machine Learning - Grid Search

- Machine Learning - Data Scaling

- Machine Learning - Train and Test

- Machine Learning - Association Rules

- Machine Learning - Apriori Algorithm

- Machine Learning - Gaussian Discriminant Analysis

- Machine Learning - Cost Function

- Machine Learning - Bayes Theorem

- Machine Learning - Precision and Recall

- Machine Learning - Adversarial

- Machine Learning - Stacking

- Machine Learning - Epoch

- Machine Learning - Perceptron

- Machine Learning - Regularization

- Machine Learning - Overfitting

- Machine Learning - P-value

- Machine Learning - Entropy

- Machine Learning - MLOps

- Machine Learning - Data Leakage

- Machine Learning - Resources

- Machine Learning - Quick Guide

- Machine Learning - Useful Resources

- Machine Learning - Discussion

Machine Learning - Mean-Shift Clustering

The Mean-Shift clustering algorithm is a non-parametric clustering algorithm that works by iteratively shifting the mean of a data point towards the densest area of the data. The densest area of the data is determined by the kernel function, which is a function that assigns weights to the data points based on their distance from the mean. The kernel function used in Mean-Shift clustering is usually a Gaussian function.

The steps involved in the Mean-Shift clustering algorithm are as follows −

Initialize the mean of each data point to its own value.

For each data point, compute the mean shift vector, which is the vector that points towards the densest area of the data.

Update the mean of each data point by shifting it towards the densest area of the data.

Repeat steps 2 and 3 until convergence is reached.

The Mean-Shift clustering algorithm is a density-based clustering algorithm, which means that it identifies clusters based on the density of the data points rather than the distance between them. In other words, the algorithm identifies clusters based on the areas where the density of the data points is highest.

Implementation of Mean-Shift Clustering in Python

The Mean-Shift clustering algorithm can be implemented in Python programming language using the scikit-learn library. The scikit-learn library is a popular machine learning library in Python that provides various tools for data analysis and machine learning. The following steps are involved in implementing the Mean-Shift clustering algorithm in Python using the scikit-learn library −

Step 1 − Import the necessary libraries

The numpy library is used for scientific computing in Python, while the matplotlib library is used for data visualization. The sklearn.cluster library contains the MeanShift class, which is used for implementing the Mean-Shift clustering algorithm in Python.

The estimate_bandwidth function is used to estimate the bandwidth of the kernel function, which is an important parameter in the Mean-Shift clustering algorithm.

import numpy as np import matplotlib.pyplot as plt from sklearn.cluster import MeanShift, estimate_bandwidth

Step 2 − Generate the data

In this step, we generate a random dataset with 500 data points and 2 features. We use the numpy.random.randn function to generate the data.

# Generate the data X = np.random.randn(500,2)

Step 3 − Estimate the bandwidth of the kernel function

In this step, we estimate the bandwidth of the kernel function using the estimate_bandwidth function. The bandwidth is an important parameter in the Mean-Shift clustering algorithm, which determines the width of the kernel function.

# Estimate the bandwidth bandwidth = estimate_bandwidth(X, quantile=0.1, n_samples=100)

Step 4 − Initialize the Mean-Shift clustering algorithm

In this step, we initialize the Mean-Shift clustering algorithm using the MeanShift class. We pass the bandwidth parameter to the class to set the width of the kernel function.

# Initialize the Mean-Shift algorithm ms = MeanShift(bandwidth=bandwidth, bin_seeding=True)

Step 5 − Train the model

In this step, we train the Mean-Shift clustering algorithm on the dataset using the fit method of the MeanShift class.

# Train the model ms.fit(X)

Step 6 − Visualize the results

# Visualize the results

labels = ms.labels_

cluster_centers = ms.cluster_centers_

n_clusters_ = len(np.unique(labels))

print("Number of estimated clusters:", n_clusters_)

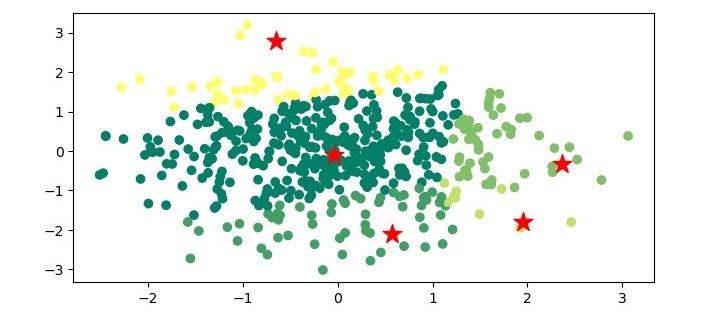

# Plot the data points and the centroids

plt.figure(figsize=(7.5, 3.5))

plt.scatter(X[:,0], X[:,1], c=labels, cmap='viridis')

plt.scatter(cluster_centers[:,0], cluster_centers[:,1], marker='*', s=300, c='r')

plt.show()

In this step, we visualize the results of the Mean-Shift clustering algorithm. We extract the cluster labels and the cluster centers from the trained model. We then print the number of estimated clusters. Finally, we plot the data points and the centroids using the matplotlib library.

Example

Here is the complete implementation example of Mean-Shift Clustering Algorithm in python −

import numpy as np

import matplotlib.pyplot as plt

from sklearn.cluster import MeanShift, estimate_bandwidth

# Generate the data

X = np.random.randn(500,2)

# Estimate the bandwidth

bandwidth = estimate_bandwidth(X, quantile=0.1, n_samples=100)

# Initialize the Mean-Shift algorithm

ms = MeanShift(bandwidth=bandwidth, bin_seeding=True)

# Train the model

ms.fit(X)

# Visualize the results

labels = ms.labels_

cluster_centers = ms.cluster_centers_

n_clusters_ = len(np.unique(labels))

print("Number of estimated clusters:", n_clusters_)

# Plot the data points and the centroids

plt.figure(figsize=(7.5, 3.5))

plt.scatter(X[:,0], X[:,1], c=labels, cmap='summer')

plt.scatter(cluster_centers[:,0], cluster_centers[:,1], marker='*',

s=200, c='r')

plt.show()

Output

When you execute the program, it will produce the following plot as the output −

Applications of Mean-Shift Clustering

The Mean-Shift clustering algorithm has several applications in various fields. Some of the applications of Mean-Shift clustering are as follows −

Computer vision − Mean-Shift clustering is widely used in computer vision for object tracking, image segmentation, and feature extraction.

Image processing − Mean-Shift clustering is used for image segmentation, which is the process of dividing an image into multiple segments based on the similarity of the pixels.

Anomaly detection − Mean-Shift clustering can be used for detecting anomalies in data by identifying the areas with low density.

Customer segmentation − Mean-Shift clustering can be used for customer segmentation in marketing by identifying groups of customers with similar behavior and preferences.

Social network analysis − Mean-Shift clustering can be used for clustering users in social networks based on their interests and interactions.