- Machine Learning Basics

- Machine Learning - Home

- Machine Learning - Getting Started

- Machine Learning - Basic Concepts

- Machine Learning - Python Libraries

- Machine Learning - Applications

- Machine Learning - Life Cycle

- Machine Learning - Required Skills

- Machine Learning - Implementation

- Machine Learning - Challenges & Common Issues

- Machine Learning - Limitations

- Machine Learning - Reallife Examples

- Machine Learning - Data Structure

- Machine Learning - Mathematics

- Machine Learning - Artificial Intelligence

- Machine Learning - Neural Networks

- Machine Learning - Deep Learning

- Machine Learning - Getting Datasets

- Machine Learning - Categorical Data

- Machine Learning - Data Loading

- Machine Learning - Data Understanding

- Machine Learning - Data Preparation

- Machine Learning - Models

- Machine Learning - Supervised

- Machine Learning - Unsupervised

- Machine Learning - Semi-supervised

- Machine Learning - Reinforcement

- Machine Learning - Supervised vs. Unsupervised

- Machine Learning Data Visualization

- Machine Learning - Data Visualization

- Machine Learning - Histograms

- Machine Learning - Density Plots

- Machine Learning - Box and Whisker Plots

- Machine Learning - Correlation Matrix Plots

- Machine Learning - Scatter Matrix Plots

- Statistics for Machine Learning

- Machine Learning - Statistics

- Machine Learning - Mean, Median, Mode

- Machine Learning - Standard Deviation

- Machine Learning - Percentiles

- Machine Learning - Data Distribution

- Machine Learning - Skewness and Kurtosis

- Machine Learning - Bias and Variance

- Machine Learning - Hypothesis

- Regression Analysis In ML

- Machine Learning - Regression Analysis

- Machine Learning - Linear Regression

- Machine Learning - Simple Linear Regression

- Machine Learning - Multiple Linear Regression

- Machine Learning - Polynomial Regression

- Classification Algorithms In ML

- Machine Learning - Classification Algorithms

- Machine Learning - Logistic Regression

- Machine Learning - K-Nearest Neighbors (KNN)

- Machine Learning - Naïve Bayes Algorithm

- Machine Learning - Decision Tree Algorithm

- Machine Learning - Support Vector Machine

- Machine Learning - Random Forest

- Machine Learning - Confusion Matrix

- Machine Learning - Stochastic Gradient Descent

- Clustering Algorithms In ML

- Machine Learning - Clustering Algorithms

- Machine Learning - Centroid-Based Clustering

- Machine Learning - K-Means Clustering

- Machine Learning - K-Medoids Clustering

- Machine Learning - Mean-Shift Clustering

- Machine Learning - Hierarchical Clustering

- Machine Learning - Density-Based Clustering

- Machine Learning - DBSCAN Clustering

- Machine Learning - OPTICS Clustering

- Machine Learning - HDBSCAN Clustering

- Machine Learning - BIRCH Clustering

- Machine Learning - Affinity Propagation

- Machine Learning - Distribution-Based Clustering

- Machine Learning - Agglomerative Clustering

- Dimensionality Reduction In ML

- Machine Learning - Dimensionality Reduction

- Machine Learning - Feature Selection

- Machine Learning - Feature Extraction

- Machine Learning - Backward Elimination

- Machine Learning - Forward Feature Construction

- Machine Learning - High Correlation Filter

- Machine Learning - Low Variance Filter

- Machine Learning - Missing Values Ratio

- Machine Learning - Principal Component Analysis

- Machine Learning Miscellaneous

- Machine Learning - Performance Metrics

- Machine Learning - Automatic Workflows

- Machine Learning - Boost Model Performance

- Machine Learning - Gradient Boosting

- Machine Learning - Bootstrap Aggregation (Bagging)

- Machine Learning - Cross Validation

- Machine Learning - AUC-ROC Curve

- Machine Learning - Grid Search

- Machine Learning - Data Scaling

- Machine Learning - Train and Test

- Machine Learning - Association Rules

- Machine Learning - Apriori Algorithm

- Machine Learning - Gaussian Discriminant Analysis

- Machine Learning - Cost Function

- Machine Learning - Bayes Theorem

- Machine Learning - Precision and Recall

- Machine Learning - Adversarial

- Machine Learning - Stacking

- Machine Learning - Epoch

- Machine Learning - Perceptron

- Machine Learning - Regularization

- Machine Learning - Overfitting

- Machine Learning - P-value

- Machine Learning - Entropy

- Machine Learning - MLOps

- Machine Learning - Data Leakage

- Machine Learning - Resources

- Machine Learning - Quick Guide

- Machine Learning - Useful Resources

- Machine Learning - Discussion

Machine Learning - BIRCH Clustering

BIRCH (Balanced Iterative Reducing and Clustering hierarchies) is a hierarchical clustering algorithm that is designed to handle large datasets efficiently. The algorithm builds a treelike structure of clusters by recursively partitioning the data into subclusters until a stopping criterion is met.

BIRCH uses two main data structures to represent the clusters: Clustering Feature (CF) and Sub-Cluster Feature (SCF). CF is used to summarize the statistical properties of a set of data points, while SCF is used to represent the structure of subclusters.

BIRCH clustering has three main steps −

Initialization − BIRCH constructs an empty tree structure and sets the maximum number of CFs that can be stored in a node.

Clustering − BIRCH reads the data points one by one and adds them to the tree structure. If a CF is already present in a node, BIRCH updates the CF with the new data point. If there is no CF in the node, BIRCH creates a new CF for the data point. BIRCH then checks if the number of CFs in the node exceeds the maximum threshold. If the threshold is exceeded, BIRCH creates a new subcluster by recursively partitioning the CFs in the node.

Refinement − BIRCH refines the tree structure by merging the subclusters that are similar based on a distance metric.

Implementation of BIRCH Clustering in Python

To implement BIRCH clustering in Python, we can use the scikit-learn library. The scikitlearn library provides a BIRCH class that implements the BIRCH algorithm.

Here is an example of how to use the BIRCH class to cluster a dataset −

Example

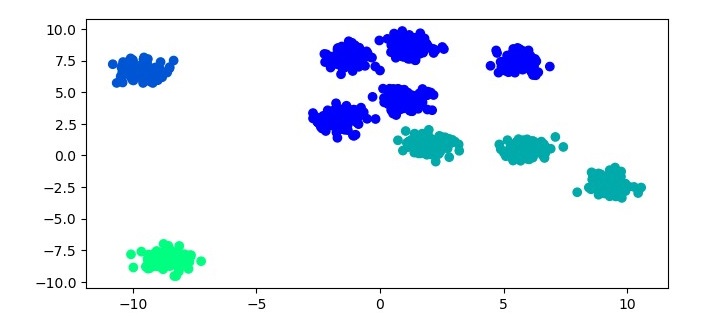

from sklearn.datasets import make_blobs from sklearn.cluster import Birch import matplotlib.pyplot as plt # Generate sample data X, y = make_blobs(n_samples=1000, centers=10, cluster_std=0.50, random_state=0) # Cluster the data using BIRCH birch = Birch(threshold=1.5, n_clusters=4) birch.fit(X) labels = birch.predict(X) # Plot the results plt.figure(figsize=(7.5, 3.5)) plt.scatter(X[:, 0], X[:, 1], c=labels, cmap='winter') plt.show()

In this example, we first generate a sample dataset using the make_blobs function from scikit-learn. We then cluster the dataset using the BIRCH algorithm. For the BIRCH algorithm, we instantiate a Birch object with the threshold parameter set to 1.5 and the n_clusters parameter set to 4. We then fit the Birch object to the dataset using the fit method and predict the cluster labels using the predict method. Finally, we plot the results using a scatter plot.

Output

When you execute the given program, it will produce the following plot as the output −

Advantages of BIRCH Clustering

BIRCH clustering has several advantages over other clustering algorithms, including −

Scalability − BIRCH is designed to handle large datasets efficiently by using a treelike structure to represent the clusters.

Memory efficiency − BIRCH uses CF and SCF data structures to summarize the statistical properties of the data points, which reduces the memory required to store the clusters.

Fast clustering − BIRCH can cluster the data points quickly because it uses an incremental clustering approach.

Disadvantages of BIRCH Clustering

BIRCH clustering also has some disadvantages, including −

Sensitivity to parameter settings − The performance of BIRCH clustering can be sensitive to the choice of parameters, such as the maximum number of CFs that can be stored in a node and the threshold value used to create subclusters.

Limited ability to handle non-spherical clusters − BIRCH assumes that the clusters are spherical, which means it may not perform well on datasets with nonspherical clusters.

Limited flexibility in the choice of distance metric − BIRCH uses the Euclidean distance metric by default, which may not be appropriate for all datasets.