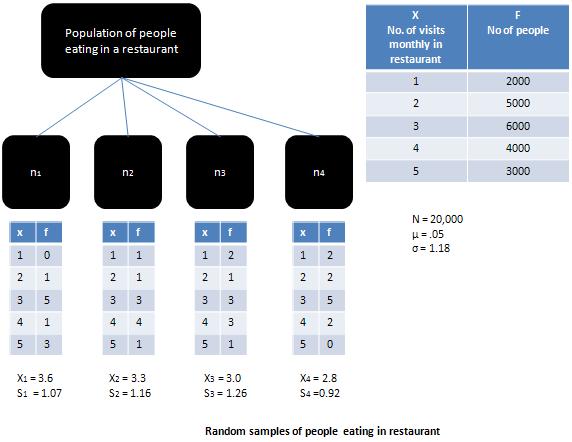

Statistics - Central limit theorem

If the population from which a sample is drawn is normal, then the sampling distribution of the sample mean is also normal, and its mean equals the population mean, whatever the sample size. When the population is skewed, as is the case illustrated in the figure, the sampling distribution of the mean still tends toward the normal distribution, provided the sample is large (as a rule of thumb, more than 30 observations).
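The statement can be written compactly in symbols. The notation here is an assumption introduced for illustration and does not come from the original text: $X_1, \dots, X_n$ are independent draws from a population with mean $\mu$ and finite standard deviation $\sigma$, and $\bar{X}_n$ is their sample mean.

$$
\bar{X}_n = \frac{1}{n}\sum_{i=1}^{n} X_i, \qquad
E\left[\bar{X}_n\right] = \mu, \qquad
\operatorname{SD}\left(\bar{X}_n\right) = \frac{\sigma}{\sqrt{n}}
$$

$$
\frac{\bar{X}_n - \mu}{\sigma / \sqrt{n}} \;\xrightarrow{\;d\;}\; N(0, 1) \quad \text{as } n \to \infty
$$

The first line holds for any sample size. The second line is the central limit theorem itself: the standardized sample mean converges in distribution to the standard normal, whatever the shape of the population.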

According to the Central Limit Theorem, for sufficiently large samples (a common rule of thumb is a sample size greater than 30), the shape of the sampling distribution of the mean becomes more and more like a normal distribution, irrespective of the shape of the parent population, as long as that population has a finite variance. This theorem explains the relationship between the population distribution and the sampling distribution. It highlights the fact that if the sample size is large enough, the sampling distribution of the mean approaches a normal distribution. The importance of the central limit theorem has been summed up by Richard I. Levin in the following words:

The significance of the central limit theorem lies in the fact that it permits us to use sample statistics to make inferences about population parameters without knowing anything about the shape of the frequency distribution of that population other than what we can get from the sample.
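The theorem can be checked with a small simulation. The sketch below is illustrative only and rests on several assumptions of its own: the exponential population with mean 1, the sample size of 30, the number of repetitions, and the helper names `sample_means` and `skewness`. It draws many samples from a strongly right-skewed population and compares the skewness and spread of the sample means with those of the population.

```python
import math
import random
import statistics


def sample_means(sample_size, num_samples, rng):
    """Draw num_samples samples of the given size from an exponential
    population (mean 1, strongly right-skewed) and return their means."""
    return [
        statistics.fmean(rng.expovariate(1.0) for _ in range(sample_size))
        for _ in range(num_samples)
    ]


def skewness(values):
    """Moment-based sample skewness; close to 0 for a symmetric distribution."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return statistics.fmean(((v - mean) / sd) ** 3 for v in values)


rng = random.Random(42)  # fixed seed so the run is repeatable

# Individual draws show the shape of the population itself.
population_draws = [rng.expovariate(1.0) for _ in range(20000)]

# Means of 5000 samples, each of size 30 (the rule-of-thumb threshold).
means_n30 = sample_means(sample_size=30, num_samples=5000, rng=rng)

print("population skewness:", round(skewness(population_draws), 2))
print("skewness of sample means (n=30):", round(skewness(means_n30), 2))
print("mean of sample means:", round(statistics.fmean(means_n30), 3))
print("std of sample means:", round(statistics.pstdev(means_n30), 3))
print("sigma / sqrt(n):", round(1 / math.sqrt(30), 3))
```

Under these assumptions, the sample means should be centered near the population mean of 1, their standard deviation should be close to $\sigma / \sqrt{n}$, and their skewness should be much smaller than the population's. Raising `sample_size` should shrink the skewness further.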