Big Data Analytics - Overview

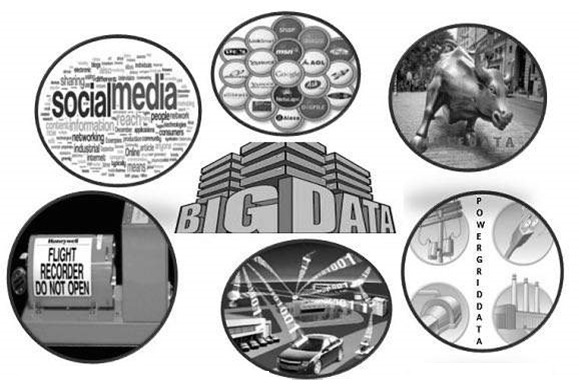

What is Big Data Analytics?

Gartner defines Big Data as follows: “Big data is high-volume, high-velocity and/or high-variety information that demands cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation.”

Big Data refers to data sets so large and complex that traditional computing approaches cannot store, manage, or process them efficiently. It is a broad term for the massive volume of data that businesses and governments generate in today's digital world. It is often measured in terabytes or petabytes and originates from three key sources: transactional data, machine data, and social data.

Big Data encompasses the data itself as well as the frameworks, tools, and methodologies used to store, access, analyse, and visualise it. Technologically advanced communication channels such as social networks, together with powerful devices, have created new ways to generate and transform data, and they challenge industry participants to find new ways to handle it. The process of converting large amounts of unstructured raw data, retrieved from different sources, into a data product that is useful to organisations forms the core of Big Data Analytics.

Steps of Big Data Analytics

Big Data Analytics helps organisations realise the potential of large and complex datasets. To get a better understanding, let's break it down into key steps −

Data Collection

This is the initial step, in which data is collected from different sources such as social media, sensors, online channels, commercial transactions, and website logs. Collected data might be structured (with a predefined organisation, such as database tables), semi-structured (such as log files), or unstructured (text documents, photos, and videos).

Data Cleaning (Data Pre-processing)

The next step is to process the collected data by removing errors and making it suitable for analysis. Raw data generally contains errors, missing values, inconsistencies, and noise. Data cleaning entails identifying and correcting these problems to ensure that the data is accurate and consistent. Pre-processing operations may also involve data transformation, normalisation, and feature extraction to prepare the data for further analysis.

Overall, data cleaning and pre-processing entail the replacement of missing data, the correction of inaccuracies, and the removal of duplicates. It is like sifting through a treasure trove, separating the rocks and debris and leaving only the valuable gems behind.
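The three cleaning operations named above (removing duplicates, correcting inconsistencies, and replacing missing values) can be illustrated with a minimal sketch. The records, field names, and the choice to fill missing amounts with the mean are assumptions made for illustration; real pipelines choose a fill strategy that suits the data.

```python
from statistics import mean

# Hypothetical raw records: one duplicate, one missing amount, inconsistent city text.
raw_records = [
    {"order_id": 1, "city": "Delhi ", "amount": 120.0},
    {"order_id": 2, "city": "mumbai", "amount": None},
    {"order_id": 2, "city": "mumbai", "amount": None},  # duplicate of the row above
    {"order_id": 3, "city": "DELHI", "amount": 80.0},
]


def clean(records):
    """Remove duplicates, standardise city names, and fill missing amounts."""
    # 1. Remove duplicates, keeping the first record seen for each order_id.
    seen, unique = set(), []
    for rec in records:
        if rec["order_id"] not in seen:
            seen.add(rec["order_id"])
            unique.append(dict(rec))  # copy so the input list is not mutated

    # 2. Correct inconsistencies: trim whitespace and use one casing for cities.
    for rec in unique:
        rec["city"] = rec["city"].strip().title()

    # 3. Replace missing amounts with the mean of the known amounts (an assumption).
    known = [rec["amount"] for rec in unique if rec["amount"] is not None]
    fill_value = mean(known)
    for rec in unique:
        if rec["amount"] is None:
            rec["amount"] = fill_value
    return unique


cleaned = clean(raw_records)
for rec in cleaned:
    print(rec)
```

The function works on copies of the records, so the raw data stays available for auditing after cleaning.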

Data Analysis

This is a key phase of big data analytics. Different techniques and algorithms are used to analyse data and derive useful insights. This can include descriptive analytics (summarising data to better understand its characteristics), diagnostic analytics (identifying patterns and relationships that explain why something happened), predictive analytics (predicting future trends or outcomes), and prescriptive analytics (making recommendations or decisions based on the analysis).

Data Visualization

In this step, data is presented visually using charts, graphs, interactive dashboards, and other graphical formats, which makes the insights from data analysis clearer and more actionable.

Interpretation and Decision Making

Once data analysis and visualisation are done and insights have been gained, stakeholders interpret the findings to make informed decisions. These decisions can include optimising corporate operations, improving customer experiences, creating new products or services, and directing strategic planning.

Data Storage and Management

Once collected, the data must be stored in a way that enables easy retrieval and analysis. Traditional databases may not be sufficient for handling large amounts of data, so many organisations use distributed storage systems such as the Hadoop Distributed File System (HDFS) or cloud-based storage solutions such as Amazon S3.

Continuous Learning and Improvement

Big data analytics is a continuous process of collecting, cleaning, and analyzing data to uncover hidden insights. It helps businesses make better decisions and gain a competitive edge.

Types of Big Data

Big Data is generally categorized into three different varieties. They are as shown below −

- Structured Data

- Semi-Structured Data

- Unstructured Data

Let us discuss each type in detail.

Structured Data

Structured data has a dedicated data model, a well-defined structure, and a consistent order, and is designed so that it can be easily accessed and used by humans or computers. It is usually stored in a well-defined tabular form, that is, in rows and columns. Example: MS Excel spreadsheets, Database Management Systems (DBMS).

Semi-Structured Data

Semi-structured data sits between structured and unstructured data. It inherits some qualities from structured data, such as delimiters or tags that separate values, but it does not follow the formal structure of data models such as an RDBMS. Example: Comma Separated Values (CSV) files, JSON, and XML.

Unstructured Data

Unstructured data does not follow any predefined structure or data model. It lacks a uniform format and is constantly changing, although it may occasionally include date and time-related information. Example: audio files, images, etc.
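The difference between the three varieties shows up in how much work is needed before the data can be queried. The sketch below is illustrative only: the table, the JSON records, and the review text are made-up samples, and JSON is used here as the semi-structured example.

```python
import json
import sqlite3

# Structured: a relational table with a fixed schema of rows and columns.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "Asha", 250.0), (2, "Ravi", 400.0)],
)
total = conn.execute("SELECT SUM(amount) FROM orders").fetchone()[0]
conn.close()

# Semi-structured: records describe themselves, but fields can differ per record.
semi_structured = '[{"id": 1, "tags": ["new"]}, {"id": 2, "email": "r@example.com"}]'
records = json.loads(semi_structured)
all_keys = sorted({key for rec in records for key in rec})

# Unstructured: free text with no fields; any structure has to be extracted.
unstructured = "Delivery was late but the support team was helpful."
word_count = len(unstructured.split())

print(total, all_keys, word_count)
```

The structured table can be queried directly with SQL, the JSON records need their fields discovered first, and the free text offers nothing beyond what can be derived from it, such as a word count.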

Types of Big Data Analytics

Some common types of Big Data analytics are as follows −

Descriptive Analytics

Descriptive analytics answers questions like “What is happening in my business?” when the dataset is business-related. It summarises past facts and aids in the creation of reports such as a company's income, profit, and sales figures. It also aids the tabulation of social media metrics. It works best with comprehensive, accurate, live data and effective visualisation.
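As a small illustration of descriptive analytics, the sketch below summarises a set of monthly sales figures. The figures are hypothetical and the chosen statistics (total, mean, median, best month) are just one reasonable summary.

```python
from statistics import mean, median

# Hypothetical monthly sales figures.
monthly_sales = {"Jan": 1200, "Feb": 950, "Mar": 1430, "Apr": 1100}
values = list(monthly_sales.values())

# Summarise what has already happened; nothing here predicts or explains.
summary = {
    "total": sum(values),
    "mean": mean(values),
    "median": median(values),
    "best_month": max(monthly_sales, key=monthly_sales.get),
}

for name, value in summary.items():
    print(f"{name}: {value}")
```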

Diagnostic Analytics

Diagnostic analytics determines root causes from data. It answers questions like “Why is it happening?” Some common techniques are drill-down, data mining, and data recovery. Organisations use diagnostic analytics because it provides in-depth insight into a particular problem. Overall, it can drill down to root causes and isolate confounding information.

For example − A report from an online store says that sales have decreased, even though people are still adding items to their shopping carts. Several things could have caused this, such as the form not loading properly, the shipping cost being too high, or not enough payment choices being offered. You can use diagnostic analytics to figure out why this is happening.
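A drill-down on the online store scenario might look like the following sketch. The session records, step names, and device field are invented for illustration; the point is the technique of narrowing from all abandoned checkouts to the step and segment where most of them occur.

```python
from collections import Counter

# Hypothetical checkout sessions: the last step each shopper reached.
sessions = [
    {"device": "mobile", "last_step": "shipping"},
    {"device": "mobile", "last_step": "shipping"},
    {"device": "desktop", "last_step": "payment"},
    {"device": "mobile", "last_step": "purchased"},
    {"device": "desktop", "last_step": "purchased"},
    {"device": "mobile", "last_step": "shipping"},
]

# Drill down: keep only abandoned sessions, then count them per step.
abandoned = [s for s in sessions if s["last_step"] != "purchased"]
drop_off_by_step = Counter(s["last_step"] for s in abandoned)
worst_step, worst_count = drop_off_by_step.most_common(1)[0]

# Drill further: within the worst step, break the drop-offs down by device.
by_device = Counter(s["device"] for s in abandoned if s["last_step"] == worst_step)

print(worst_step, worst_count, dict(by_device))
```

In this made-up sample, most abandoned sessions end at the shipping step on mobile devices, which would point the investigation towards shipping costs or the mobile shipping form.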

Predictive Analytics

This kind of analytics looks at data from the past and the present to predict what will happen in the future. Hence, it answers questions like “What will happen in the future?” Data mining, AI, and machine learning are all used in predictive analytics to forecast outcomes such as market trends and customer trends.

For example − Payment and lending companies such as PayPal and Bajaj Finance need to protect their customers from fraudulent transactions. Such a business can use predictive analytics on its past payment and user behaviour data to build a model that flags likely fraud.
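The simplest form of prediction from past data is a trend line. The sketch below fits a straight line by ordinary least squares and extrapolates one step ahead. The sales figures are hypothetical and deliberately linear; production systems, including fraud models, use far richer features and machine learning methods.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical past data: sales for months 1 to 5.
months = [1, 2, 3, 4, 5]
sales = [100, 110, 120, 130, 140]

slope, intercept = fit_line(months, sales)

# Predict the next, unseen month from the fitted trend.
forecast_month_6 = slope * 6 + intercept
print(forecast_month_6)
```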

Prescriptive Analytics

Prescriptive analytics helps frame a strategic decision; its results answer the question “What do I need to do?” Prescriptive analytics works with both descriptive and predictive analytics. Most of the time, it relies on AI and machine learning.

For example − Prescriptive analytics can help a company maximise its business and profit. In the airline industry, prescriptive analytics applies a set of algorithms that adjust flight prices automatically based on customer demand and on factors such as weather conditions, location, and holiday seasons.
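A prescriptive system turns an analysis into a recommended action. The sketch below uses a hand-written pricing rule; the function name, thresholds, and multipliers are all hypothetical, and real airline pricing relies on optimisation and machine learning models, not fixed rules.

```python
def recommend_price(base_price, seats_sold, seats_total, is_holiday=False):
    """Return a recommended ticket price from a simple demand rule (illustrative)."""
    load_factor = seats_sold / seats_total  # share of seats already sold
    if load_factor >= 0.8:
        multiplier = 1.25  # high demand: raise the price
    elif load_factor <= 0.3:
        multiplier = 0.85  # low demand: discount to fill seats
    else:
        multiplier = 1.0   # normal demand: keep the base price
    if is_holiday:
        multiplier += 0.10  # assumed holiday season surcharge
    return round(base_price * multiplier, 2)


print(recommend_price(200, 170, 200))                   # nearly full flight
print(recommend_price(200, 40, 200))                    # mostly empty flight
print(recommend_price(200, 100, 200, is_holiday=True))  # half full, holiday
```

The output of the function is a decision (a price to charge), which is what separates prescriptive analytics from the descriptive and predictive steps that feed it.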

Tools and Technologies of Big Data Analytics

Some commonly used big data analytics tools are as follows −

Hadoop

A framework to store and analyse large amounts of data. Hadoop distributes both storage and processing across clusters of machines, and it is one of the tools that made big data analytics practical.
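Hadoop processes data with the MapReduce model: a map step emits key-value pairs, the framework groups the pairs by key, and a reduce step combines each group. The sketch below imitates those three phases in plain Python on two made-up lines of text. It does not use Hadoop itself, and the function names are chosen only for illustration.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every word in one line of input."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group all values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine the values collected for one key into a single result."""
    return key, sum(values)


lines = ["big data needs big tools", "data tools scale out"]

# On a cluster, each line (or block of lines) would be mapped on a different machine.
mapped = [pair for line in lines for pair in map_phase(line)]
word_counts = dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())
print(word_counts)
```

Because each map call and each reduce call depends only on its own input, the work can be spread across many machines, which is the property that lets this model scale to very large data sets.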

MongoDB

A database for managing unstructured and semi-structured data. MongoDB is a document-oriented NoSQL database specially designed to store, access, and process large quantities of such data.

Talend

A tool for data integration and management. Talend's solution package includes complete capabilities for data integration, data quality, master data management, and data governance. Talend integrates with big data tools such as Hadoop, Spark, and NoSQL databases, allowing organisations to process and analyse enormous amounts of data efficiently. It includes connectors and components for interacting with big data technologies, so users can create data pipelines for ingesting, processing, and analysing large amounts of data.

Cassandra

A distributed database used to handle very large volumes of data. Cassandra is an open-source distributed NoSQL database management system that handles massive amounts of data across many commodity servers, ensuring high availability and scalability without sacrificing performance.

Spark

Used for fast, large-scale data processing, including near-real-time stream processing. Apache Spark is a robust and versatile distributed computing framework that provides a single platform for big data processing, analytics, and machine learning, making it popular in industries such as e-commerce, finance, healthcare, and telecommunications.

Storm

An open-source, real-time computation system. Apache Storm is a robust and versatile stream processing framework that allows organisations to process and analyse real-time data streams on a large scale, making it suited to a wide range of use cases in industries such as banking, telecommunications, e-commerce, and IoT.

Kafka

A distributed streaming platform that also provides fault-tolerant storage of event streams. Apache Kafka is a versatile and powerful event streaming platform that allows organisations to create scalable, fault-tolerant, real-time data pipelines and streaming applications to efficiently meet their data processing requirements.