- Big Data Analytics Tutorial

- Big Data Analytics - Home

- Big Data Analytics - Overview

- Big Data Analytics - Characteristics

- Big Data Analytics - Data Life Cycle

- Big Data Analytics - Architecture

- Big Data Analytics - Methodology

- Big Data Analytics - Core Deliverables

- Big Data Adoption & Planning Considerations

- Big Data Analytics - Key Stakeholders

- Big Data Analytics - Data Analyst

- Big Data Analytics - Data Scientist

- Big Data Analytics Project

- Data Analytics - Problem Definition

- Big Data Analytics - Data Collection

- Big Data Analytics - Cleansing data

- Big Data Analytics - Summarizing

- Big Data Analytics - Data Exploration

- Big Data Analytics - Data Visualization

- Big Data Analytics Methods

- Big Data Analytics - Introduction to R

- Data Analytics - Introduction to SQL

- Big Data Analytics - Charts & Graphs

- Big Data Analytics - Data Tools

- Data Analytics - Statistical Methods

- Advanced Methods

- Machine Learning for Data Analysis

- Naive Bayes Classifier

- K-Means Clustering

- Association Rules

- Big Data Analytics - Decision Trees

- Logistic Regression

- Big Data Analytics - Time Series

- Big Data Analytics - Text Analytics

- Big Data Analytics - Online Learning

- Big Data Analytics Useful Resources

- Big Data Analytics - Quick Guide

- Big Data Analytics - Resources

- Big Data Analytics - Discussion

Big Data Analytics - Data Life Cycle

A life cycle is a process which denotes a sequential flow of one or more activities involved in Big Data Analytics. Before going to learn about a big data analytics life cycle; let’s understand the traditional data mining life cycle.

Traditional Data Mining Life Cycle

To provide a framework to organize the work systematically for an organization; the framework supports the entire business process and provides valuable business insights to make strategic decisions to survive the organisation in a competitive world as well as maximize its profit.

The Traditional Data Mining Life Cycle includes the following phases −

- Problem Definition − It’s an initial phase of the data mining process; it includes problem definitions that need to be uncovered or solved. A problem definition always includes business goals that need to be achieved and the data that need to be explored to identify patterns, business trends, and process flow to achieve the defined goals.

- Data Collection − The next step is data collection. This phase involves data extraction from different sources like databases, weblogs, or social media platforms that are required for analysis and to do business intelligence. Collected data is considered raw data because it includes impurities and may not be in the required formats and structures.

- Data Pre-processing − After data collection, we clean it and pre-process it to remove noises, missing value imputation, data transformation, feature selection, and convert data into a required format before you can begin your analysis.

- Data Exploration and Visualization − Once pre-processing is done on data, we explore it to understand its characteristics, and identify patterns and trends. This phase also includes data visualizations using scatter plots, histograms, or heat maps to show the data in graphical form.

- Modelling − This phase includes creating data models to solve realistic problems defined in Phase 1. This could include an effective machine learning algorithm; training the model, and assessing its performance.

- Evaluation − The final stage in data mining is to assess the model's performance and determine if it matches your business goals in step 1. If the model is underperforming, you may need to do data exploration or feature selection once again.

CRISP-DM Methodology

The CRISP-DM stands for Cross Industry Standard Process for Data Mining; it is a methodology which describes commonly used approaches that a data mining expert uses to tackle problems in traditional BI data mining. It is still being used in traditional BI data mining teams. The following figure illustrates it. It describes the major phases of the CRISP-DM cycle and how they are interrelated with one another

CRISP-DM was introduced in 1996 and the next year, it got underway as a European Union project under the ESPRIT funding initiative. The project was led by five companies: SPSS, Teradata, Daimler AG, NCR Corporation, and OHRA (an insurance company). The project was finally incorporated into SPSS.

Phases of CRISP-DM Life Cycle | Steps of CRISP-DM Life Cycle

- Business Understanding − This phase includes problem definition, project objectives and requirements from a business perspective, and then converts it into data mining. A preliminary plan is designed to achieve the objectives.

- Data Understanding − The data understanding phase initially starts with data collection, to identify data quality, discover data insights, or detect interesting subsets to form hypotheses for hidden information.

- Data Preparation − The data preparation phase covers all activities to construct the final dataset (data that will be fed into the modelling tool(s)) from the initial raw data. Data preparation tasks are likely to be performed multiple times, and not in any prescribed order. Tasks include table, record, and attribute selection as well as transformation and cleaning of data for modelling tools.

- Modelling − In this phase, different modelling techniques are selected and applied; different techniques may be available to process the same type of data; an expert always opts for effective and efficient ones.

- Evaluation − Once the proposed model is completed; before the final deployment of the model, it is important to evaluate it thoroughly and review the steps executed to construct the model, to ensure that the model achieves the desired business objectives.

- Deployment − The creation of the model is generally not the end of the project. Even if the purpose of the model is to increase knowledge of the data, the knowledge gained will need to be organized and presented in a way that is useful to the customer.In many cases, it will be the customer, not the data analyst, who will carry out the deployment phase. Even if the analyst deploys the model, the customer needs to understand upfront the actions which will need to be carried out to make use of the created models.

SEMMA Methodology

SEMMA is another methodology developed by SAS for data mining modelling. It stands for Sample, Explore, Modify, Model, and Asses.

The description of its phases is as follows −

- Sample − The process starts with data sampling, e.g., selecting the dataset for modelling. The dataset should be large enough to contain sufficient information to retrieve, yet small enough to be used efficiently. This phase also deals with data partitioning.

- Explore − This phase covers the understanding of the data by discovering anticipated and unanticipated relationships between the variables, and also abnormalities, with the help of data visualization.

- Modify − The Modify phase contains methods to select, create and transform variables in preparation for data modelling.

- Model − In the Model phase, the focus is on applying various modelling (data mining) techniques on the prepared variables to create models that possibly provide the desired outcome.

- Assess − The evaluation of the modelling results shows the reliability and usefulness of the created models.

The main difference between CRISM–DM and SEMMA is that SEMMA focuses on the modelling aspect, whereas CRISP-DM gives more importance to stages of the cycle before modelling such as understanding the business problem to be solved, understanding and pre-processing the data to be used as input, for example, machine learning algorithms.

Big Data Life Cycle

Big Data Analytics is a field that involves managing the entire data lifecycle, including data collection, cleansing, organisation, storage, analysis, and governance. In the context of big data, the traditional approaches were not optimal for analysing large-volume data, data with different values, data velocity etc.

For example, the SEMMA methodology disdains data collection and pre-processing of different data sources. These stages normally constitute most of the work in a successful big data project. Big Data analytics involves the identification, acquisition, processing, and analysis of large amounts of raw data, unstructured and semi-structured data which aims to extract valuable information for trend identification, enhancing existing company data, and conducting extensive searches.

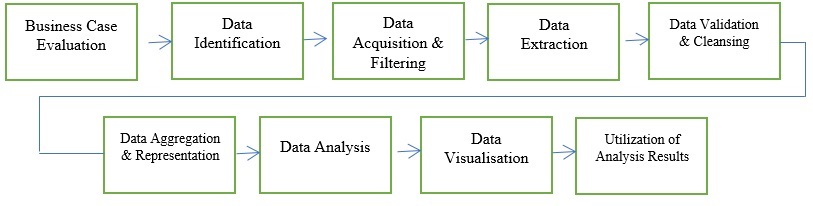

The Big Data analytics lifecycle can be divided into the following phases −

- Business Case Evaluation

- Data Identification

- Data Acquisition & Filtering

- Data Extraction

- Data Validation & Cleansing

- Data Aggregation & Representation

- Data Analysis

- Data Visualization

- Utilization of Analysis Results

The primary differences between Big Data Analytics and traditional data analysis are in the value, velocity, and variety of data processed. To address the specific requirements for big data analysis, an organised method is required. The description of Big Data analytics lifecycle phases are as follows −

Business Case Evaluation

A Big Data analytics lifecycle begins with a well-defined business case that outlines the problem identification, objective, and goals for conducting the analysis. Before beginning the real hands-on analytical duties, the Business Case Evaluation needs the creation, assessment, and approval of a business case.

An examination of a Big Data analytics business case gives a direction to decision-makers to understand the business resources that will be required and business problems that need to be addressed. The case evaluation examines whether the business problem definition being addressed is truly a Big Data problem.

Data Identification

The data identification phase focuses on identifying the necessary datasets and their sources for the analysis project. Identifying a larger range of data sources may improve the chances of discovering hidden patterns and relationships. The firm may require internal or external datasets and sources, depending on the nature of the business problems it is addressing.

Data Acquisition and Filtering

The data acquisition process entails gathering data from all of the sources mentioned in the previous phase. We subjected the data to automated filtering to remove corrupted data or records irrelevant to the study objectives. Depending on the type of data source, data might come as a collection of files, such as data acquired from a third-party data provider, or as API integration, such as with Twitter.

Once generated or entering the enterprise boundary, we must save both internal and external data. We save this data to disk and then analyse it using batch analytics. In real-time analytics, we first analyse the data before saving it to disc.

Data Extraction

This phase focuses on extracting disparate data and converting it into a format that the underlying Big Data solution can use for data analysis.

Data Validation and Cleansing

Incorrect data can bias and misrepresent analytical results. Unlike typical enterprise data, which has a predefined structure and is verified can feed for analysis; Big Data analysis can be unstructured if data is not validated before analysis. Its intricacy can make it difficult to develop a set of appropriate validation requirements. Data Validation and Cleansing is responsible for defining complicated validation criteria and deleting any known faulty data.

Data Aggregation and Representation

The Data Aggregation and Representation phase focuses on combining multiple datasets to create a cohesive view. Performing this stage can get tricky due to variances in −

Data Structure − The data format may be the same, but the data model may differ.

Semantics − A variable labelled differently in two datasets may signify the same thing, for example, "surname" and "last name."

Data Analysis

The data analysis phase is responsible for carrying out the actual analysis work, which usually comprises one or more types of analytics. Especially if the data analysis is exploratory, we can continue this stage iteratively until we discover the proper pattern or association.

Data Visualization

The Data Visualization phase visualizes data graphically to communicate outcomes for effective interpretation by business users.The resultant outcome helps to perform visual analysis, allowing them to uncover answers to queries they have not yet formulated.

Utilization of Analysis Results

The outcomes made available to business personnel to support business decision-making, such as via dashboards.All of the mentioned nine phases are the primary phases of the Big Data Analytics life cycle.

Below mentioned phases can also be kept in consideration −

Research

Analyse what other companies have done in the same situation. This involves looking for solutions that are reasonable for your company, even though it involves adapting other solutions to the resources and requirements that your company has. In this stage, a methodology for the future stages should be defined.

Human Resources Assessment

Once the problem is defined, it’s reasonable to continue analyzing if the current staff can complete the project successfully. Traditional BI teams might not be capable of delivering an optimal solution to all the stages, so it should be considered before starting the project if there is a need to outsource a part of the project or hire more people.

Data Acquisition

This section is key in a big data life cycle; it defines which type of profiles would be needed to deliver the resultant data product. Data gathering is a non-trivial step of the process; it normally involves gathering unstructured data from different sources. To give an example, it could involve writing a crawler to retrieve reviews from a website. This involves dealing with text, perhaps in different languages normally requiring a significant amount of time to be completed.

Data Munging

Once the data is retrieved, for example, from the web, it needs to be stored in an easy-to-use format. To continue with the review examples, let's assume the data is retrieved from different sites where each has a different display of the data.

Suppose one data source gives reviews in terms of rating in stars, therefore it is possible to read this as a mapping for the response variable $\mathrm{y\:\epsilon \:\lbrace 1,2,3,4,5\rbrace}$. Another data source gives reviews using an arrow system, one for upvoting and the other for downvoting. This would imply a response variable of the form $\mathrm{y\:\epsilon \:\lbrace positive,negative \rbrace}$.

To combine both data sources, a decision has to be made to make these two response representations equivalent. This can involve converting the first data source response representation to the second form, considering one star as negative and five stars as positive. This process often requires a large time allocation to be delivered with good quality.

Data Storage

Once the data is processed, it sometimes needs to be stored in a database. Big data technologies offer plenty of alternatives regarding this point. The most common alternative is using the Hadoop File System for storage which provides users a limited version of SQL, known as HIVE Query Language. This allows most analytics tasks to be done in similar ways as would be done in traditional BI data warehouses, from the user perspective. Other storage options to be considered are MongoDB, Redis, and SPARK.

This stage of the cycle is related to the human resources knowledge in terms of their abilities to implement different architectures. Modified versions of traditional data warehouses are still being used in large-scale applications. For example, Teradata and IBM offer SQL databases that can handle terabytes of data; open-source solutions such as PostgreSQL and MySQL are still being used for large-scale applications.

Even though there are differences in how the different storages work in the background, from the client side, most solutions provide an SQL API. Hence having a good understanding of SQL is still a key skill to have for big data analytics. This stage a priori seems to be the most important topic, in practice, this is not true. It is not even an essential stage. It is possible to implement a big data solution that would work with real-time data, so in this case, we only need to gather data to develop the model and then implement it in real-time. So there would not be a need to formally store the data at all.

Exploratory Data Analysis

Once the data has been cleaned and stored in a way that insights can be retrieved from it, the data exploration phase is mandatory. The objective of this stage is to understand the data, this is normally done with statistical techniques and also plotting the data. This is a good stage to evaluate whether the problem definition makes sense or is feasible.

Data Preparation for Modeling and Assessment

This stage involves reshaping the cleaned data retrieved previously and using statistical preprocessing for missing values imputation, outlier detection, normalization, feature extraction and feature selection.

Modelling

The prior stage should have produced several datasets for training and testing, for example, a predictive model. This stage involves trying different models and looking forward to solving the business problem at hand. In practice, it is normally desired that the model would give some insight into the business. Finally, the best model or combination of models is selected evaluating its performance on a left-out dataset.

Implementation

In this stage, the data product developed is implemented in the data pipeline of the company. This involves setting up a validation scheme while the data product is working, to track its performance. For example, in the case of implementing a predictive model, this stage would involve applying the model to new data and once the response is available, evaluate the model.